Chapter-2: An Introduction to the CF Conventions

Why should we adopt a metadata standard?

In the earth system science (ESS) community, huge amounts of data are generated, exchanged, and consumed. Finding the right dataset in such an enormous pool is often time-consuming for researchers, and it is equally challenging for data providers to bring their data products to users. To meet this challenge in today’s research ecosystem, enriching and standardizing the metadata of research datasets is highly recommended. By doing this, you make your data more findable, interoperable, and reusable for other stakeholders in the community, and thus contribute to the FAIR principles of open science.

A metadata standard is a set of rules or guidelines that defines how metadata should be structured, described, and managed. It specifies the elements or attributes to be included, the semantics of those elements, and often the syntax or format in which the metadata should be encoded.

The CF Conventions is becoming a widely adopted metadata standard for the netCDF data format. It is the successor of the COARDS Conventions and is characterised by its flexibility and compatibility with other metadata standards. Broadly speaking, the CF Conventions does two important things:

Providing standards for attributes: which attributes should be included in a netCDF file?

Giving recommendations on the data structure in netCDF for different types of data (e.g. gridded data, discrete points).

1. Standard Attributes in netCDF

According to the CF Conventions[2] (at the time of writing, the most recent version is 1.11), some attributes are required while others are optional. Nevertheless, it is recommended to describe each variable, as well as the dataset as a whole, with attributes as thoroughly as possible. Here, we use the same sea surface temperature dataset[1] as in the previous chapter as an example.

    xarray.Dataset {
    dimensions:
        lon = 180 ;
        bnds = 2 ;
        lat = 170 ;
        time = 24 ;

    variables:
        float64 lon(lon) ;
            lon:standard_name = longitude ;
            lon:long_name = longitude ;
            lon:units = degrees_east ;
            lon:axis = X ;
            lon:bounds = lon_bnds ;
            lon:original_units = degrees_east ;
        float64 lon_bnds(lon, bnds) ;
        float64 lat(lat) ;
            lat:standard_name = latitude ;
            lat:long_name = latitude ;
            lat:units = degrees_north ;
            lat:axis = Y ;
            lat:bounds = lat_bnds ;
            lat:original_units = degrees_north ;
        float64 lat_bnds(lat, bnds) ;
        float64 time(time) ;
            time:standard_name = time ;
            time:long_name = time ;
            time:units = days since 2001-1-1 ;
            time:axis = T ;
            time:calendar = 360_day ;
            time:bounds = time_bnds ;
            time:original_units = seconds since 2001-1-1 ;
        float64 time_bnds(time, bnds) ;
        float32 tos(time, lat, lon) ;
            tos:standard_name = sea_surface_temperature ;
            tos:long_name = Sea Surface Temperature ;
            tos:units = K ;
            tos:cell_methods = time: mean (interval: 30 minutes) ;
            tos:_FillValue = 1.0000000200408773e+20 ;
            tos:missing_value = 1.0000000200408773e+20 ;
            tos:original_name = sosstsst ;
            tos:original_units = degC ;
            tos:history = At 16:37:23 on 01/11/2005: CMOR altered the data in the following ways: added 2.73150E+02 to yield output units; Cyclical dimension was output starting at a different lon; ;

    // global attributes:
        :title = IPSL model output prepared for IPCC Fourth Assessment SRES A2 experiment ;
        :institution = IPSL (Institut Pierre Simon Laplace, Paris, France) ;
        :source = IPSL-CM4_v1 (2003) : atmosphere : LMDZ (IPSL-CM4_IPCC, 96x71x19) ; ocean ORCA2 (ipsl_cm4_v1_8, 2x2L31); sea ice LIM (ipsl_cm4_v ;
        :contact = Sebastien Denvil, sebastien.denvil@ipsl.jussieu.fr ;
        :project_id = IPCC Fourth Assessment ;
        :table_id = Table O1 (13 November 2004) ;
        :experiment_id = SRES A2 experiment ;
        :realization = 1 ;
        :cmor_version = 0.9599999785423279 ;
        :Conventions = CF-1.0 ;
        :history = YYYY/MM/JJ: data generated; YYYY/MM/JJ+1 data transformed At 16:37:23 on 01/11/2005, CMOR rewrote data to comply with CF standards and IPCC Fourth Assessment requirements ;
        :references = Dufresne et al, Journal of Climate, 2015, vol XX, p 136 ;
        :comment = Test drive ;
    }

1.1. Variable Attributes

Required Attributes:

`long_name` / `standard_name`: Define the meaning of the variable. The `long_name` can be chosen freely by the dataset creator, whereas the `standard_name` must be taken from the controlled vocabulary defined in the “CF Standard Name Table”.

E.g. in variable `tos`, the long name is defined as “Sea Surface Temperature”, but other wordings are allowed as long as they make sense, such as “sea surface temperature” or “Temperature of Sea Surface”. The standard name, however, must be written exactly as “sea_surface_temperature”, as given in the Standard Name Table.

Based on the Standard Name Table, the standard name of a longitude variable is always “longitude”, that of a latitude variable “latitude”, and that of a time variable “time”.

`units`: The unit of the variable, which should be parsable by the UDUNITS library. If a variable has a `standard_name`, its `units` can be looked up in the “CF Standard Name Table” too.

E.g. as defined in the Standard Name Table, `degrees_north` is the unit of a latitude variable and `degrees_east` that of a longitude variable.

The `units` of a time variable should be a string of the form `[time-interval] since YYYY-MM-DD hh:mm:ss`, e.g. `days since 2001-1-1` in the example. “seconds”, “minutes”, “hours”, and “days” are the most commonly used time intervals; “months” and “years” are not recommended because their length may vary.

The `units` of sea surface temperature in the example is kelvin (`K`), which matches the unit recommended by the “CF Standard Name Table”.
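The rules above can be sketched as a small check in plain Python. This is only an illustration under stated assumptions: the attributes of a variable are represented as a dictionary (the same shape as the `attrs` mapping of an xarray variable), the helper `check_required_attrs` is hypothetical, the set of standard names is a hand-copied excerpt rather than the full CF Standard Name Table, and the time-units pattern is a simplification that does not replace UDUNITS.

```python
import re

# Hand-copied excerpt (assumption); a real check would load the full
# CF Standard Name Table.
KNOWN_STANDARD_NAMES = {"sea_surface_temperature", "longitude", "latitude", "time"}

# Simplified pattern for "[time-interval] since YYYY-MM-DD [hh:mm:ss]".
TIME_UNITS = re.compile(
    r"^(seconds|minutes|hours|days) since \d{4}-\d{1,2}-\d{1,2}"
    r"( \d{1,2}:\d{2}:\d{2})?$"
)


def check_required_attrs(attrs, is_time=False):
    """Return a list of problems found in one variable's attribute dict."""
    problems = []
    if "long_name" not in attrs and "standard_name" not in attrs:
        problems.append("neither long_name nor standard_name is set")
    name = attrs.get("standard_name")
    if name is not None and name not in KNOWN_STANDARD_NAMES:
        problems.append(f"unknown standard_name: {name!r}")
    if "units" not in attrs:
        problems.append("units is missing")
    elif is_time and not TIME_UNITS.match(attrs["units"]):
        problems.append(f"unexpected time units: {attrs['units']!r}")
    return problems


# Attributes copied from the example dataset.
tos_attrs = {
    "standard_name": "sea_surface_temperature",
    "long_name": "Sea Surface Temperature",
    "units": "K",
}
time_attrs = {"standard_name": "time", "units": "days since 2001-1-1"}

print(check_required_attrs(tos_attrs))                 # → []
print(check_required_attrs(time_attrs, is_time=True))  # → []
# A free-form standard name is rejected, and the missing units are reported.
print(check_required_attrs({"standard_name": "Sea Surface Temperature"}))
```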

Important or Common Optional Attributes:

`valid_range`: Two numbers specifying the minimum and maximum valid values of a variable. Any value outside this range is treated as missing. It must not be defined if either `valid_min` or `valid_max` is defined.

`_FillValue`: Indicates missing data. It should be a scalar (a single value) and lie outside the `valid_range`. This attribute is not allowed for coordinate variables.

E.g. in variable `tos`, every cell of the 3D field where no value exists is filled with the value 1.e+20.

`scale_factor` / `add_offset`: Used for unpacking data for display.

\[\text{unpackedData} = \text{scaleFactor} \times \text{storedData} + \text{addOffset}\]

`actual_range`: Must exactly equal the minimum and maximum of the unpacked variable.

`axis`: Identifies the latitude, longitude, vertical or time axis (`X` for longitude, `Y` for latitude, `Z` for the vertical axis, `T` for time).

`coordinates`: (This attribute exists only in data variables.) A blank-separated list of auxiliary coordinate variables (and optionally coordinate variables). There is no restriction on the order in which the variable names appear in the string.

Though it is not given in the example dataset, variable `tos` may contain a coordinates attribute like `tos:coordinates = lon_bnds lat_bnds time_bnds`.

If the data are gridded, the attribute `cell_methods` is valuable too:

`cell_methods`: The method by which the cell values are calculated, given as a string of the form `name: method`.

E.g. `tos:cell_methods = time: mean (interval: 30 minutes)` tells that the sea surface temperature value in every cell is a temporal mean over 30 days (as noted before, the interval recorded in the attribute should read 30 days rather than 30 minutes).
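To make the interplay of `_FillValue`, `scale_factor`, `add_offset` and `actual_range` more concrete, here is a minimal sketch in plain Python. The helper names `unpack` and `actual_range`, as well as the stored values, scale factor, offset and fill value, are made up for illustration and do not come from the example dataset. Libraries such as xarray can normally apply this decoding for you when a file is opened.

```python
def unpack(stored, scale_factor=1.0, add_offset=0.0, fill_value=None):
    """Apply unpacked = scale_factor * stored + add_offset.

    Stored values equal to the fill value are returned as None (missing).
    """
    return [
        None if fill_value is not None and value == fill_value
        else scale_factor * value + add_offset
        for value in stored
    ]


def actual_range(unpacked):
    """Minimum and maximum of the unpacked data, ignoring missing values."""
    valid = [value for value in unpacked if value is not None]
    return min(valid), max(valid)


# Hypothetical packed integers; -32768 plays the role of _FillValue.
stored = [0, 20, -32768, 50]
unpacked = unpack(stored, scale_factor=0.5, add_offset=270.0, fill_value=-32768)

print(unpacked)                # → [270.0, 280.0, None, 295.0]
print(actual_range(unpacked))  # → (270.0, 295.0)
```

Note that the fill value is compared against the stored values before scaling, which is why the check happens ahead of the arithmetic.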

Note

In the example dataset, no attributes are provided for the boundary variables (`lon_bnds`, `lat_bnds`, `time_bnds`) because boundary variables usually inherit the attributes of their parent variables (`lon`, `lat`, `time`).

1.2. Global Attributes

`title`: A short description of the content of the netCDF file.

`Conventions`: The name of the metadata standard applied to this dataset.

`institution`: The name of the organization where the dataset was produced.

`source`: The method used to produce the original data.

`references`: References that describe the data or the data production method, e.g. a published article.

`history`: A list of actions taken to modify the original data.

If the data are discrete, the attribute `featureType` is REQUIRED; its value should be one of these options: `point`, `timeSeries`, `profile`, `trajectory`, `timeSeriesProfile`, `trajectoryProfile`.
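A quick way to review the global attributes of a dataset is to compare them against the list above. The sketch below does this for a plain dictionary (the same shape as the `attrs` mapping of an xarray dataset). The helper `check_global_attrs` is hypothetical, and comparing `featureType` case-sensitively is a simplification chosen for this example.

```python
GLOBAL_ATTRS = ("title", "Conventions", "institution",
                "source", "references", "history")
FEATURE_TYPES = {"point", "timeSeries", "profile", "trajectory",
                 "timeSeriesProfile", "trajectoryProfile"}


def check_global_attrs(attrs, discrete=False):
    """Return a list of problems found in a dataset's global attributes."""
    problems = [
        f"missing global attribute: {name}"
        for name in GLOBAL_ATTRS
        if name not in attrs
    ]
    if discrete:
        feature_type = attrs.get("featureType")
        if feature_type is None:
            problems.append("featureType is required for discrete data")
        elif feature_type not in FEATURE_TYPES:  # strict comparison (assumption)
            problems.append(f"unknown featureType: {feature_type!r}")
    return problems


# A deliberately incomplete excerpt of the example dataset's global attributes.
excerpt = {
    "title": "IPSL model output prepared for IPCC Fourth Assessment SRES A2 experiment",
    "institution": "IPSL (Institut Pierre Simon Laplace, Paris, France)",
    "Conventions": "CF-1.0",
}

for problem in check_global_attrs(excerpt):
    print(problem)
# missing global attribute: source
# missing global attribute: references
# missing global attribute: history
```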

Appendix A of the CF Conventions provides an overview of all attributes defined by this metadata standard; you can find more information about each attribute in the documentation. Note that including attributes that are not specified in the CF Conventions does not make a dataset incompatible with the CF Conventions.

2. File Structure Recommendations for Diverse Data Types

Data in a netCDF file can be presented in two major forms: gridded, or as discrete sampling geometries (DSGs). Though the interpretation of gridded data may vary depending on the coordinate system, the way gridded data are arranged in netCDF files is quite similar. The example presented above has a representative structure of gridded data, where multiple layers of grids representing different variables (coordinate variable, auxiliary coordinate variable, data variable) are overlaid upon each other, so that overlaid cells share the same coordinates. Images captured by earth observation satellites and climate model outputs are usually gridded data. The arrangement of DSG data in netCDF files, however, can be very diverse, depending on the type as well as the length of the DSG features.

In the CF Conventions, DSG features are divided into the following types:

Point: Unconnected points / stations, each point / station only contains a single element (e.g. Earthquake data, Lightning data).

Time Series: Data are taken over periods of time for a single station (e.g. Weather station data, Fixed buoys).

Profile: Data are taken along a vertical line at a single station (e.g. Atmospheric profiles from satellites).

Trajectory: Data are taken along a spatial path at different times, each trajectory contains a set of connected points (e.g. Cruise data, drifting buoys).

Combined DSG:

Timeseries of Profiles: Profiles taken over periods of time for a fixed station.

Trajectory of Profiles: A collection of profiles along a trajectory (e.g. Ship soundings).
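The six types listed above can be told apart by three yes/no questions about how the data were sampled. The following sketch encodes that reading of the list. The function `feature_type` and its parameters are hypothetical names introduced for illustration; the returned strings are the `featureType` values defined by the CF Conventions.

```python
def feature_type(over_time=False, vertical=False, along_path=False):
    """Pick a CF featureType from three questions about the sampling.

    over_time:  repeated measurements over a period at a fixed station
    vertical:   measurements along a vertical line
    along_path: measurements along a spatial path (moving platform)
    """
    if along_path:
        return "trajectoryProfile" if vertical else "trajectory"
    if over_time:
        return "timeSeriesProfile" if vertical else "timeSeries"
    return "profile" if vertical else "point"


print(feature_type())                                 # → point
print(feature_type(over_time=True))                   # → timeSeries
print(feature_type(vertical=True))                    # → profile
print(feature_type(along_path=True))                  # → trajectory
print(feature_type(over_time=True, vertical=True))    # → timeSeriesProfile
print(feature_type(along_path=True, vertical=True))   # → trajectoryProfile
```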

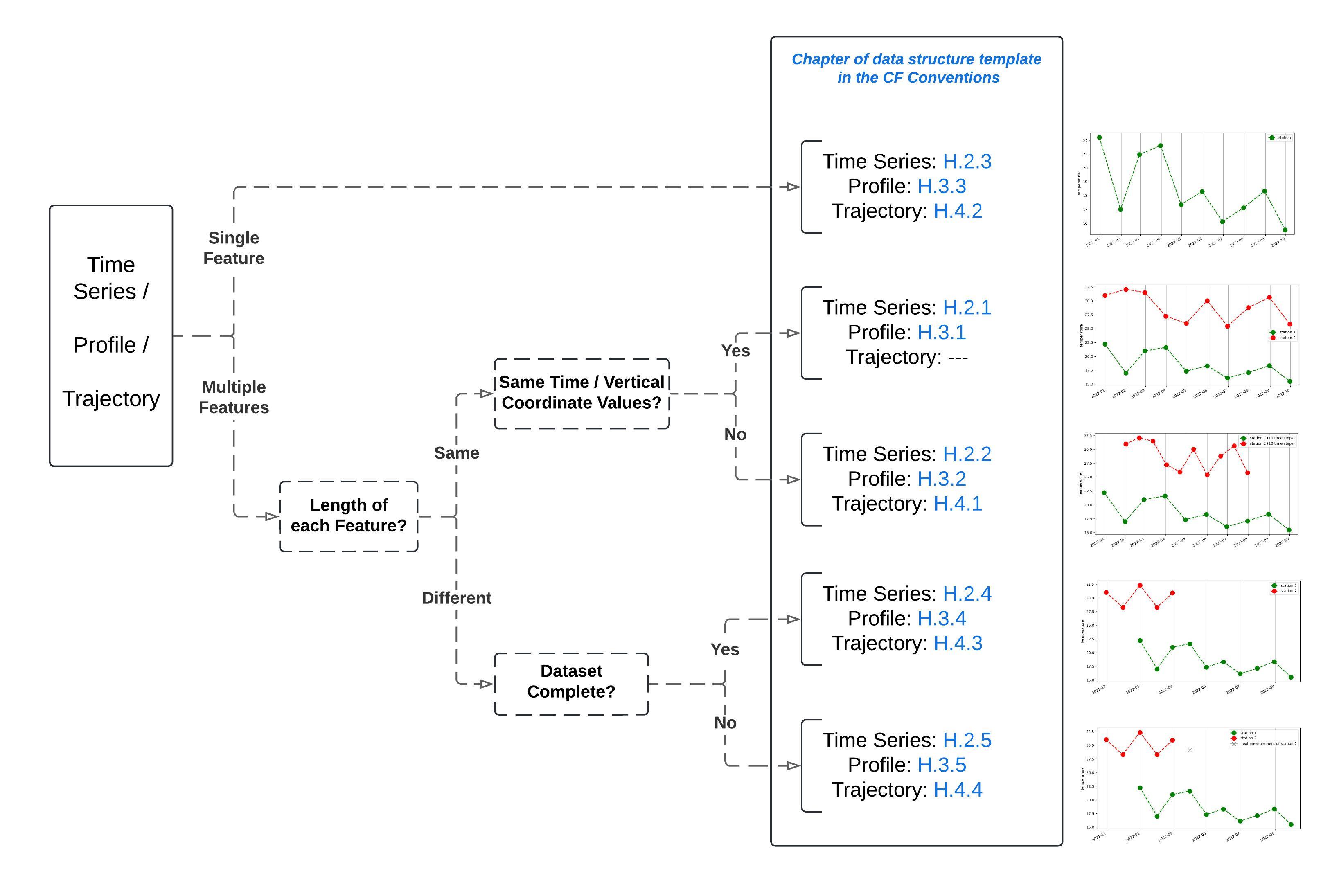

Appendix H of the CF Conventions provides concrete recommendations on the data arrangement of the various DSG types. By following the roadmap in Fig. 1, you can find the recommended netCDF file structure for the specific type of DSG data you have. In chapter 4, we will walk you through the procedure of creating a netCDF file for every type of time series data as suggested by the CF Conventions. In chapters 5, 6 and 7, we have prepared examples that elaborate on this procedure for other types of DSG data (profile, trajectory, complex DSG), along with exercises and sample solutions so that you can practice creating standard netCDF files on your own.

Fig. 1 Navigation to the proper netCDF structure of DSG data.